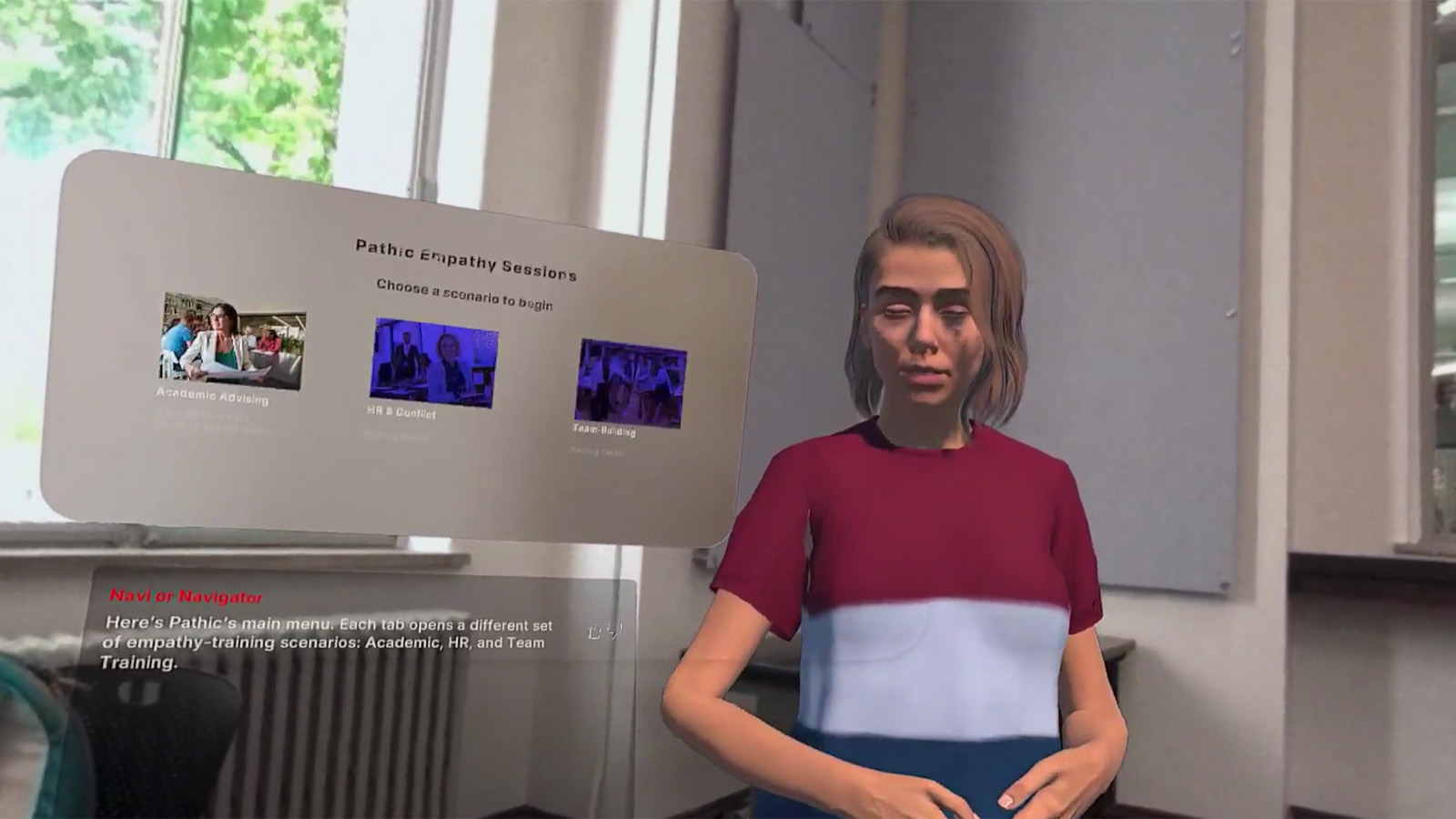

Pathic MR

An empathy-training Mixed Reality system that enables users to understand conflict dynamics, emotional nuance, and perspective-taking through spatially anchored AI-driven conversations. Pathic MR transforms abstract empathy concepts into embodied experiences: users don't just learn about perspective-taking, they physically inhabit another person's viewpoint.

"Almost all stakeholders identified the critical importance of stepping into the other's shoes."

— Research Finding

This project represents the convergence of spatial computing, conversational AI, and human-centered design to address one of the most persistent challenges in human interaction: the failure to truly understand another person's experience.

- Role: Lead Researcher & XR Developer

- Timeline: Mar 2025 – May 2025

- Tech Stack: Unity, Meta Quest 3, XR Interaction Toolkit, Convai AI

- Research: 15 Stakeholder Interviews

- Status: Research Prototype Complete

Core Capabilities

Pathic MR is a Mixed Reality empathy training platform built on Meta Quest 3 that uses spatially anchored AI characters, emotionally responsive dialogue, and role-shifting mechanics to create genuine perspective-taking experiences.

Spatially Anchored AI

AI characters powered by Unity and XR Interaction Toolkit, positioned in meaningful spatial relationships with the user.

Emotional Dialogue Engine

Convai-powered responses with custom emotion-scaffolding prompts for empathetically intelligent dialogue.

Role-Shifting System

Experience conversations as yourself or as the other person, creating genuine understanding rather than just intellectual agreement.

360° Scene-Based Scenarios

Empathy scenarios grounded in real conflict patterns across workplace, academic, and relationship contexts.

Gaze-Triggered Transitions

Real-time narrative transitions based on where users look, giving a natural interaction flow without controller dependency.

Voice-First UX

Hands-free, embodied interaction enabling natural conversation without breaking immersion.

Problem Landscape

Miscommunication is the root of most interpersonal conflicts. People struggle to see others' perspectives when emotions escalate, which is precisely when perspective-taking matters most.

The gap between knowing you should empathize and actually feeling another person's experience creates a persistent barrier to conflict resolution.

Why Current Training Fails

- Role-playing exercises feel artificial

- Theoretical discussions don't create emotional memory

- Organizations lack real-time training tools

- Static, scripted, non-interactive approaches

"People often fail to recognize how their tone or assumptions escalate conflicts."

— Stakeholder Research

"Training works only when it feels real, not theoretical."

— Stakeholder Research

Research & Discovery

The research foundation combined multiple methodologies to understand both the problem space and design requirements.

Research Methods

- Literature review: HCI, spatial computing, empathy research

- Contextual inquiry: 15 stakeholders across 4 sectors

- Scenario analysis: common conflict archetypes

- Conversational testing: scripted and unscripted

- Cognitive load assessment

- Iterative prototype testing

Key Findings

- Users need emotional validation before solutions

- Spatial placement affects perceived safety and trust

- Voice-first interactions reduce cognitive load

- Emotionally dynamic avatars outperform static ones

- Subtle gestures beat theatrical animation

Research Themes That Shaped Design

"Emotional mirroring enhances trust."

"Gaze as input improves immersion."

"Empathy requires vulnerability and control."

Personas & Scenarios

Stakeholder research revealed three primary user archetypes, each facing distinct conflict challenges:

The Overwhelmed Student

Struggles with academic pressure and peer relationships. Needs conflict resolution for power dynamics with professors and collaboration tensions.

Pain: Traditional training feels disconnected from actual experiences.

The Frustrated Employee

Navigates workplace tensions. Needs constructive communication patterns for disagreements about work allocation and feedback.

Pain: Corporate training feels performative rather than practical.

The Conflicted Partner

Struggles to express needs without triggering defensiveness. Needs safe space to practice difficult conversations.

Pain: Therapy concepts don't translate to embodied skill-building.

Core Scenario Types

- Academic advising conflicts (power dynamics)

- Workplace tension (feedback, deadlines, team dynamics)

- Relationship misunderstandings (communication styles)

- Social misinterpretations (tone and context)

- Emotional blindspots in everyday life

System Architecture

Input Layer

- Voice input capture

- Gaze raycasts

- Spatial XR interactions

- Proximity detection

Interpretation

- Convai LLM processing

- Emotion classifier

- Narrative state logic

- Branch management

Response Gen

- Real-time avatar speech

- Facial expressions

- State-driven animations

- Natural prosody

Spatial Layer

- Room-scale MR layout

- Passthrough grounding

- Anchored placement

- Proximity transitions
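As a rough illustration of how the four layers could hand data to one another, here is a minimal sketch in Python (the prototype itself runs in Unity, so this is not the project's code). The class names, keyword lists, replies, and distances are all hypothetical placeholders; in the real system the interpretation step is handled by Convai and an emotion classifier rather than keyword matching.

```python
from dataclasses import dataclass


@dataclass
class UserInput:
    transcript: str     # text from voice capture (input layer)
    gaze_target: str    # name of the object hit by the gaze raycast
    distance_m: float   # user-to-avatar distance from proximity detection


@dataclass
class AvatarResponse:
    speech: str
    expression: str
    anchor_distance_m: float


def classify_emotion(transcript: str) -> str:
    """Placeholder keyword classifier standing in for a trained model."""
    lowered = transcript.lower()
    if any(word in lowered for word in ("unfair", "angry", "ridiculous")):
        return "anger"
    if any(word in lowered for word in ("worried", "afraid", "nervous")):
        return "fear"
    return "neutral"


def respond(user: UserInput) -> AvatarResponse:
    # Input layer: only engage when the user is looking at the avatar.
    if user.gaze_target != "avatar":
        return AvatarResponse("", "idle", user.distance_m)

    # Interpretation layer: infer the user's emotional state.
    emotion = classify_emotion(user.transcript)

    # Response generation: validate the emotion before offering solutions.
    if emotion == "anger":
        speech, expression = "I can hear how frustrating this is.", "concerned"
    elif emotion == "fear":
        speech, expression = "That sounds stressful. Take your time.", "soft"
    else:
        speech, expression = "Tell me more about what happened.", "attentive"

    # Spatial layer: give an upset user a little more room (assumed values).
    distance = 1.5 if emotion != "neutral" else 1.2
    return AvatarResponse(speech, expression, distance)
```

Calling `respond(UserInput("This is so unfair", "avatar", 1.2))` yields a validating reply with a "concerned" expression and a slightly larger anchor distance, mirroring the research finding that users need emotional validation before solutions.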

Technical Implementation

Unity + XR Toolkit

- Meta Quest 3 passthrough for physical grounding

- Gaze raycasting for attention-aware interactions

- Spatial anchors for consistent positioning

- Timeline-based state transitions

- Natural conversational distances
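One common way to make gaze-based triggers dependable is a dwell timer with a short grace period, so that a brief flicker of the raycast off the target does not reset progress. The sketch below shows that general technique in Python; it is not the Unity implementation, and the class name and the default dwell and grace durations are assumptions chosen for illustration.

```python
class GazeDwellTrigger:
    """Fires once after the user has looked at a target for `dwell_s` seconds.

    Short glances away (up to `grace_s` seconds) are tolerated without
    resetting the accumulated dwell time.
    """

    def __init__(self, dwell_s: float = 1.0, grace_s: float = 0.2):
        self.dwell_s = dwell_s
        self.grace_s = grace_s
        self._dwell = 0.0   # accumulated time on target
        self._away = 0.0    # time since the gaze last hit the target
        self.fired = False

    def update(self, on_target: bool, dt: float) -> bool:
        """Advance by one frame; return True only on the frame the trigger fires."""
        if self.fired:
            return False
        if on_target:
            self._dwell += dt
            self._away = 0.0
        else:
            self._away += dt
            if self._away > self.grace_s:
                self._dwell = 0.0  # looked away too long: start over
        if self._dwell >= self.dwell_s:
            self.fired = True
            return True
        return False
```

In an engine loop, `update` would be called every frame with the raycast hit result and the frame's delta time, and a `True` return would start the narrative transition.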

Convai Integration

- Node-based conversational graph

- LLM emotional shaping per scenario

- Response overrides for edge cases

- Safety filtering

- Custom "emotion scaffolding" prompts
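To make the idea of a node-based conversational graph concrete, here is a small Python sketch in which each node carries a scaffolding instruction and emotion-keyed edges. It does not use or represent Convai's API; `Node`, `ConversationGraph`, the `scaffold` field, and the visit cap are hypothetical names introduced for illustration. The visit cap is one simple guard against branches that loop back on themselves.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterable


@dataclass
class Node:
    name: str
    scaffold: str  # hypothetical emotion-scaffolding instruction for the LLM
    edges: Dict[str, str] = field(default_factory=dict)  # emotion -> next node


class ConversationGraph:
    def __init__(self, nodes: Iterable[Node], start: str,
                 max_visits: int = 2, exit_node: str = "wrap_up"):
        self.nodes = {node.name: node for node in nodes}
        self.current = start
        self.max_visits = max_visits
        self.exit_node = exit_node
        self.visits = {start: 1}

    def advance(self, emotion: str) -> Node:
        """Follow the edge for the detected emotion, capping repeat visits."""
        node = self.nodes[self.current]
        # Stay on the current node when no edge matches the emotion.
        nxt = node.edges.get(emotion, self.current)
        if self.visits.get(nxt, 0) + 1 > self.max_visits:
            nxt = self.exit_node  # loop guard: route to a safe exit
        self.visits[nxt] = self.visits.get(nxt, 0) + 1
        self.current = nxt
        return self.nodes[nxt]


graph = ConversationGraph(
    nodes=[
        Node("opening", "Speak calmly and state the disagreement.",
             {"anger": "validate", "neutral": "explore"}),
        Node("validate", "Acknowledge the feeling before anything else.",
             {"anger": "opening", "neutral": "explore"}),
        Node("explore", "Ask open questions about the other view."),
        Node("wrap_up", "Summarise and offer a clear way to end."),
    ],
    start="opening",
)
```

With this graph, a user who stays angry bounces between `opening` and `validate` only until the visit cap is reached, after which the conversation is routed to `wrap_up` instead of looping indefinitely.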

Emotional Model

- User tone detection → avatar animation

- Plutchik's emotion wheel for nuance

- Empathy escalation/de-escalation logic

- Intensity adjustment based on responses
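Plutchik's wheel names three intensity levels for each of its eight primary emotions, which maps naturally onto escalation and de-escalation. The Python sketch below shows one way that mapping could work; the intensity thresholds, the step size, and the rule "de-escalate after an empathic user turn" are assumptions for illustration, not the prototype's actual logic.

```python
# Plutchik's eight primary emotions, each as (mild, basic, intense).
PLUTCHIK = {
    "joy": ("serenity", "joy", "ecstasy"),
    "trust": ("acceptance", "trust", "admiration"),
    "fear": ("apprehension", "fear", "terror"),
    "surprise": ("distraction", "surprise", "amazement"),
    "sadness": ("pensiveness", "sadness", "grief"),
    "disgust": ("boredom", "disgust", "loathing"),
    "anger": ("annoyance", "anger", "rage"),
    "anticipation": ("interest", "anticipation", "vigilance"),
}


def label(emotion: str, intensity: float) -> str:
    """Map a primary emotion and an intensity in [0, 1] to a wheel label."""
    mild, basic, intense = PLUTCHIK[emotion]
    if intensity < 1 / 3:
        return mild
    if intensity < 2 / 3:
        return basic
    return intense


def adjust_intensity(current: float, user_was_empathic: bool,
                     step: float = 0.25) -> float:
    """De-escalate after an empathic user turn, escalate otherwise."""
    delta = -step if user_was_empathic else step
    return min(1.0, max(0.0, current + delta))
```

For example, an avatar at `label("anger", 0.5)` reads as "anger"; one empathic user turn lowers the intensity to 0.25, which reads as "annoyance" and could drive a softer facial animation.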

Voice UX

- Latency handling for natural feel

- Turn-taking and interruption management

- Interruptible responses

- Confidence fallback ("Let me rephrase…")
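Two of these behaviours are easy to sketch in isolation: falling back to a rephrase when confidence in a generated reply is low, and padding very fast replies with a short pause so the avatar does not answer instantly. The Python below is a hedged illustration only; the function names, the 0.6 confidence threshold, and the 0.5 second minimum pause are assumed values, not measurements from the prototype.

```python
FALLBACK = "Let me rephrase…"


def choose_reply(candidate: str, confidence: float,
                 threshold: float = 0.6) -> str:
    """Use the generated reply only when confidence clears the threshold."""
    return candidate if confidence >= threshold else FALLBACK


def extra_pause(processing_s: float, min_pause_s: float = 0.5) -> float:
    """Seconds to wait on top of processing time before the avatar speaks.

    Replies that arrive faster than `min_pause_s` are delayed so the total
    gap feels like a natural conversational beat; slow replies get no
    additional delay.
    """
    return max(0.0, min_pause_s - processing_s)
```

A reply that took 0.25 seconds to generate would be held for another 0.25 seconds, while one that took a full second would be spoken immediately.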

Design Rationale

Spatial Placement = Emotional Safety

Where an avatar stands changes power dynamics. Too close feels threatening; too far feels disconnected. The system dynamically adjusts positioning based on conversation phase and emotional intensity.
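A simple way to express this kind of adjustment is a per-phase base distance that grows with emotional intensity and is clamped to a comfortable range. The Python sketch below illustrates the idea; the phase names, every distance value, and the linear back-off rule are assumptions made for the example rather than the tuned values used in the prototype.

```python
# Hypothetical base distances (metres) for each conversation phase.
PHASE_BASE_DISTANCE_M = {
    "opening": 2.0,
    "conflict": 1.5,
    "resolution": 1.25,
}


def avatar_distance(phase: str, intensity: float,
                    min_m: float = 1.0, max_m: float = 3.0) -> float:
    """Return the target user-to-avatar distance in metres.

    `intensity` is the current emotional intensity in [0, 1]. The avatar
    backs away by up to one metre as intensity rises, and the result is
    clamped so it never crowds the user or drifts out of conversation range.
    """
    base = PHASE_BASE_DISTANCE_M[phase]
    return min(max_m, max(min_m, base + 1.0 * intensity))
```

Under these assumed numbers, `avatar_distance("conflict", 0.5)` gives 2.0 metres, and the avatar settles to 1.25 metres once the conversation reaches a calm resolution.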

Micro-Expressions Over Theatrics

Early prototypes used theatrical animation that broke immersion. "Emotional attunement requires subtlety." The final system uses understated facial animation that reads as natural.

Embodiment Creates Understanding

Generic scenarios don't create emotional memory. The system grounds conflicts in recognizable contexts that users can map to their own experiences. Physical presence creates visceral understanding.

Vulnerability + Safety

Empathy training requires genuine discomfort; that discomfort is the learning. But the system must never overwhelm. Careful pacing, user control, and clear exit paths maintain safety.

Challenges & Breakthroughs

Challenges Faced

- ✗ Avatars responding too quickly felt robotic

- ✗ Overly complex gestures broke immersion

- ✗ Narrative branches looping incorrectly

- ✗ Gaze not triggering transitions consistently

- ✗ Emotional tone mismatches (uncanny valley)

- ✗ Cognitive overload during longer scenes

Breakthroughs

- ✓ Spatial anchor realignment solved drift

- ✓ Emotion-driven narrative pacing

- ✓ Role-shift mechanic validated: users report genuine perspective shifts

- ✓ Voice-recognition stability achieved

- ✓ Avatar gaze correction improved presence

Lessons Learned

- Emotional UX is harder than technical UX

- Timing and pacing define emotional tone more than content

- Conversation must adapt, not dominate

- Safety is foundational; control enables vulnerability

Final Solution

Pathic MR delivers a complete empathy training experience: fully interactive AI-driven MR conversations that respond dynamically to user input and emotional state.

Role-Shifting Perspective

Experience the same conflict from multiple viewpoints to build genuine understanding, not just intellectual agreement.

Emotional Mirroring

Avatars respond with appropriate nuance to user behavior throughout extended training sessions.

Adaptive Simulation

Adjusts difficulty and intensity based on user responses and training objectives.

Future Roadmap

Immediate

User testing with target populations (students, employees, and relationship partners) to validate empathy training effectiveness.

Near-Term

- Emotion-driven scenario authoring tool

- Multi-avatar team interactions

- Biometric emotional markers

Long-Term Vision

- Multi-user MR training

- AI conflict pattern prediction

- Institutional integration (HR, schools, healthcare)

Reflections

Pathic MR taught me that designing for emotion is fundamentally different from designing for utility. Technical systems succeed when they work reliably. Emotional systems succeed when they feel authentic, and authenticity emerges from restraint, timing, and subtlety.

Working with conversational AI revealed how much human communication depends on non-verbal cues that language models don't capture. The challenge wasn't generating plausible text; Convai handles that well. The challenge was ensuring that generated text, avatar animation, spatial positioning, and pacing all aligned to create a unified emotional experience.

This project represents the merger of research, engineering, and design that I believe defines next-generation product development. I conducted primary research, engineered complex technical systems, and designed experiences grounded in emotional intelligence, demonstrating that these are not separate disciplines but integrated skills.

Core Insight

Empathy can be trained, but training requires embodiment. Telling people to understand others doesn't work. Placing them in another person's position (literally, spatially, and conversationally) creates understanding that persists.