NeuroNav

A real-time vehicle detection and multimodal safety alert system designed for blind and visually impaired pedestrians. NeuroNav transforms a smartphone into an "electronic eye" using on-device YOLOv8 computer vision, communicating vehicle distance, direction, type, and urgency through synchronized voice announcements, haptic patterns, and optional audio tones, all running at 10 FPS without internet connectivity to preserve privacy and ensure reliability when it matters most. This case study documents Phase 1: validating the voice-first, multimodal human-AI interface with blind users before proceeding to perception model benchmarking and field trials.

"This would boost my confidence walking around outside."

— Participant P6, Iowa Department for the Blind User Testing

This project represents my deepest exploration of trust calibration in safety-critical AI systems, where a single false negative could mean a life-threatening situation, and where the humans I'm designing for have fundamentally different sensory relationships with the world than sighted designers typically consider. Phase 1 answers a critical go/no-go question: can blind users understand, trust, customize, and act on AI-generated safety signals? The computer vision pipeline is implemented and operational; this phase validates the human-AI interaction layer that will determine whether the perception system is even adoptable before investing in large-scale model benchmarking and field reliability studies.

- Role: Lead Designer & Developer

- Project Type: Master of Science Capstone Project

- Timeline: March 2025 – Dec 2025 (5 months)

- Tech Stack: Unity 6 LTS, C#, AR Foundation, YOLOv8, Barracuda Inference

- Collaborators: Iowa Department for the Blind (Testing Partner)

- Status: Phase 1 Complete • Interface Validated • Ready for Field Trials


The Problem

Electric and hybrid vehicles have created an invisible crisis for blind pedestrians. Research documents a 37% increase in pedestrian-vehicle accidents involving blind individuals when electric vehicles are compared with traditional combustion-engine vehicles.

The physics are simple but deadly: electric vehicles are nearly silent at low speeds, exactly the speeds at which pedestrians cross streets, navigate parking lots, and move through intersections.

The blind community has developed remarkable compensatory skills (echolocation, traffic pattern recognition, environmental sound mapping), but electric vehicles have fundamentally broken these survival strategies.

User Voices from Research

"Those stupid quiet cars… you think it's safe, then zap."

"If it's electric, I won't even know it's there."

"I've stepped out thinking it's safe when it really wasn't."

The Gap

Existing assistive navigation tools focus on wayfinding and obstacle avoidance, but none address the specific, time-critical challenge of detecting silent, moving vehicles. The gap isn't just a missing app feature; it's a safety void that costs lives.

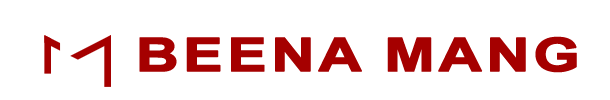

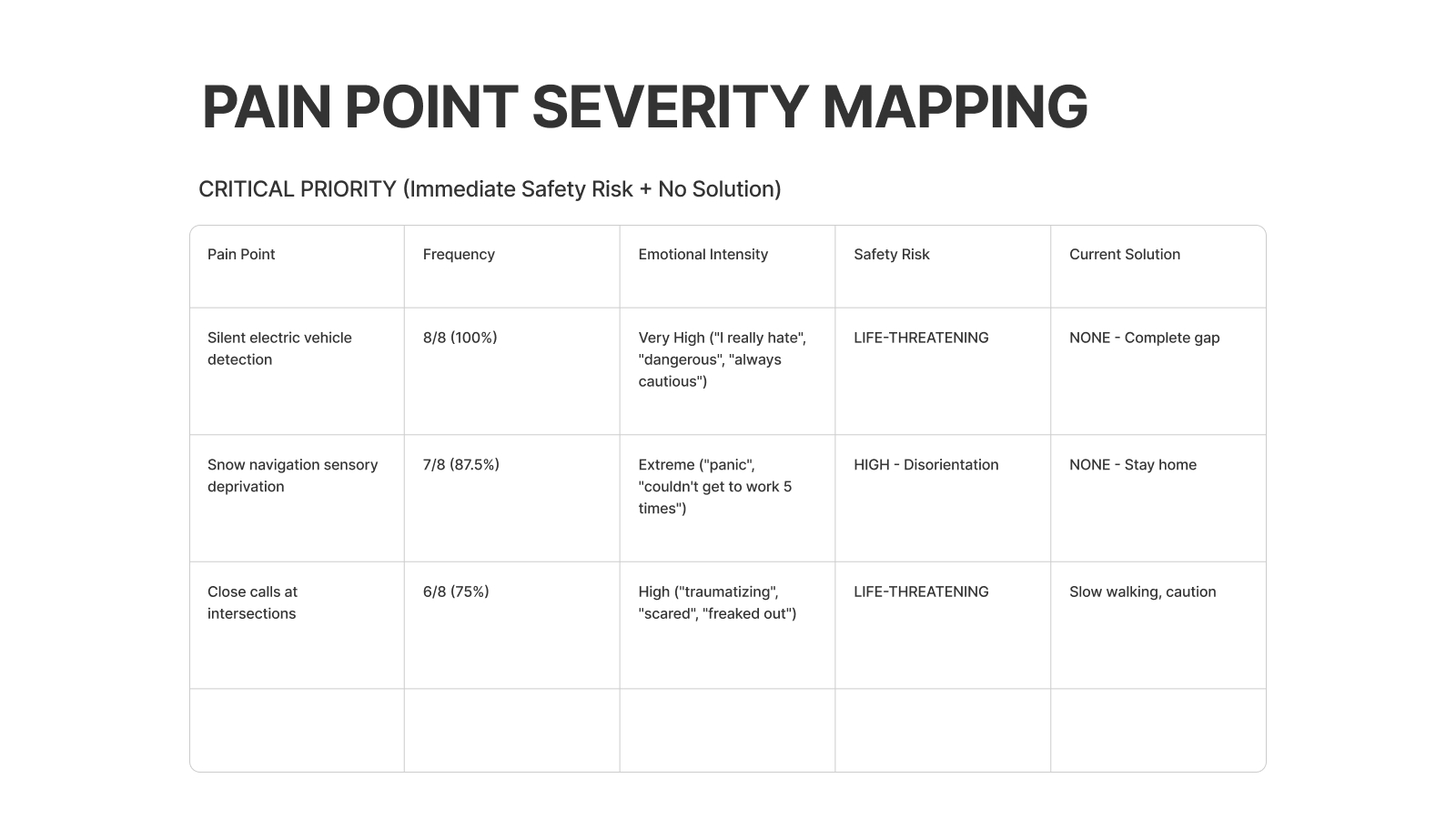

Pain Point Severity Mapping (8 Participants)

To understand which problems were truly safety-critical versus merely frustrating, I mapped every reported breakdown by frequency, emotional intensity, and current workaround. Three issues emerged as life-threatening with no effective solution: silent electric vehicles, snow-related sensory deprivation, and close calls at intersections.

- • Silent electric vehicle detection was mentioned by 8/8 participants (100%) and consistently described as 'dangerous', 'terrifying', and 'always on my mind.'

- • Snow conditions caused complete navigation breakdowns for 7/8 participants; several reported being unable to get to work multiple times.

- • Close calls at intersections were common (6/8), but users had no better strategy than 'walk slower' or 'be extra careful,' which does not scale when traffic is unpredictable.

- • Other high-priority gaps like GPS lag, overhead obstacles, turn lane prediction, and uncontrolled crossings informed second-wave features beyond the vehicle detector MVP.

Why This Matters: A Systems Perspective

This isn't just a product design challenge; it's a systems design problem with cascading implications across technology, accessibility, urban planning, and social equity. The automotive industry's shift to electric vehicles, while environmentally beneficial, has created an unintended accessibility crisis.

Where NeuroNav Sits in the Current Landscape

Most existing tools for blind navigation fall into two extremes: generic GPS routing (Google/Apple Maps) or on-demand visual description (Be My Eyes, Seeing AI). None are designed as a proactive, always-on safety layer for real-time vehicle detection.

Our unique position:

- • Only concept explicitly targeting the electric vehicle silence crisis.

- • Proactive hazard monitoring instead of reactive photo-taking.

- • Architected around sub-500ms latency and 99%+ accuracy expectations, reflecting safety-critical rather than convenience-oriented design.

- • Built with blind users from day one, making trust and co-design a core moat, not an afterthought.

Core User Needs Identified

Instant Detection

Gradual urgency indicators that communicate threat level in real time as vehicles approach.

Absolute Reliability

One false negative destroys trust entirely. Users need a system they can depend on completely.

Independence Without Fear

Freedom to navigate public spaces with confidence, not constant anxiety.

Multimodal Alerts

Feedback that works across different environmental conditions: noise, weather, and context.

Hands-Free Interaction

Doesn't interfere with cane, guide dog, or existing navigation strategies.

Sensory Prosthetic

Extending human perception into a domain where biological sensing has been rendered obsolete.

Research & Contextual Inquiry

I conducted 8 contextual inquiry interviews with blind and visually impaired participants, observing their navigation strategies in real environments and documenting the breakdowns they experience daily.

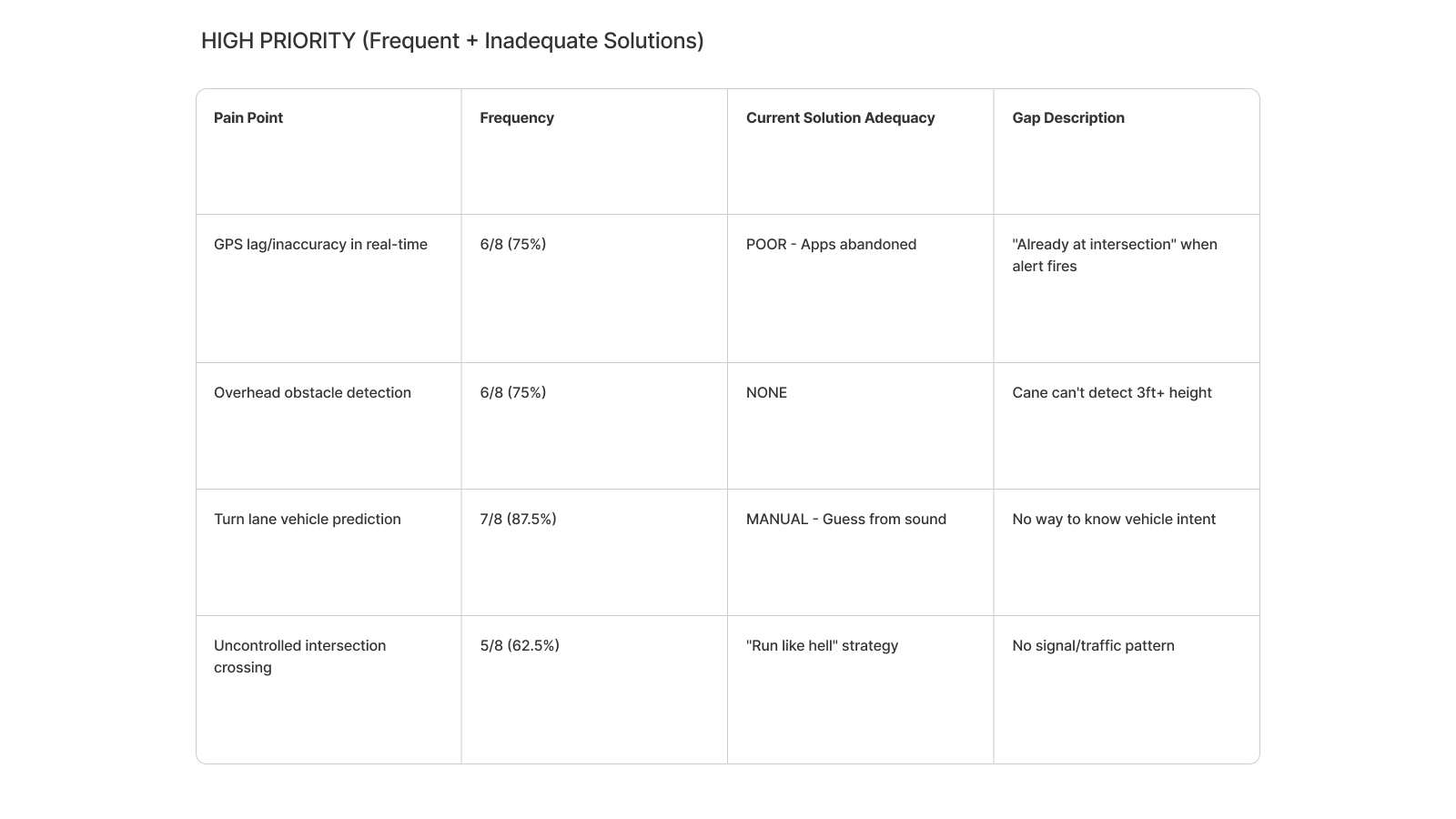

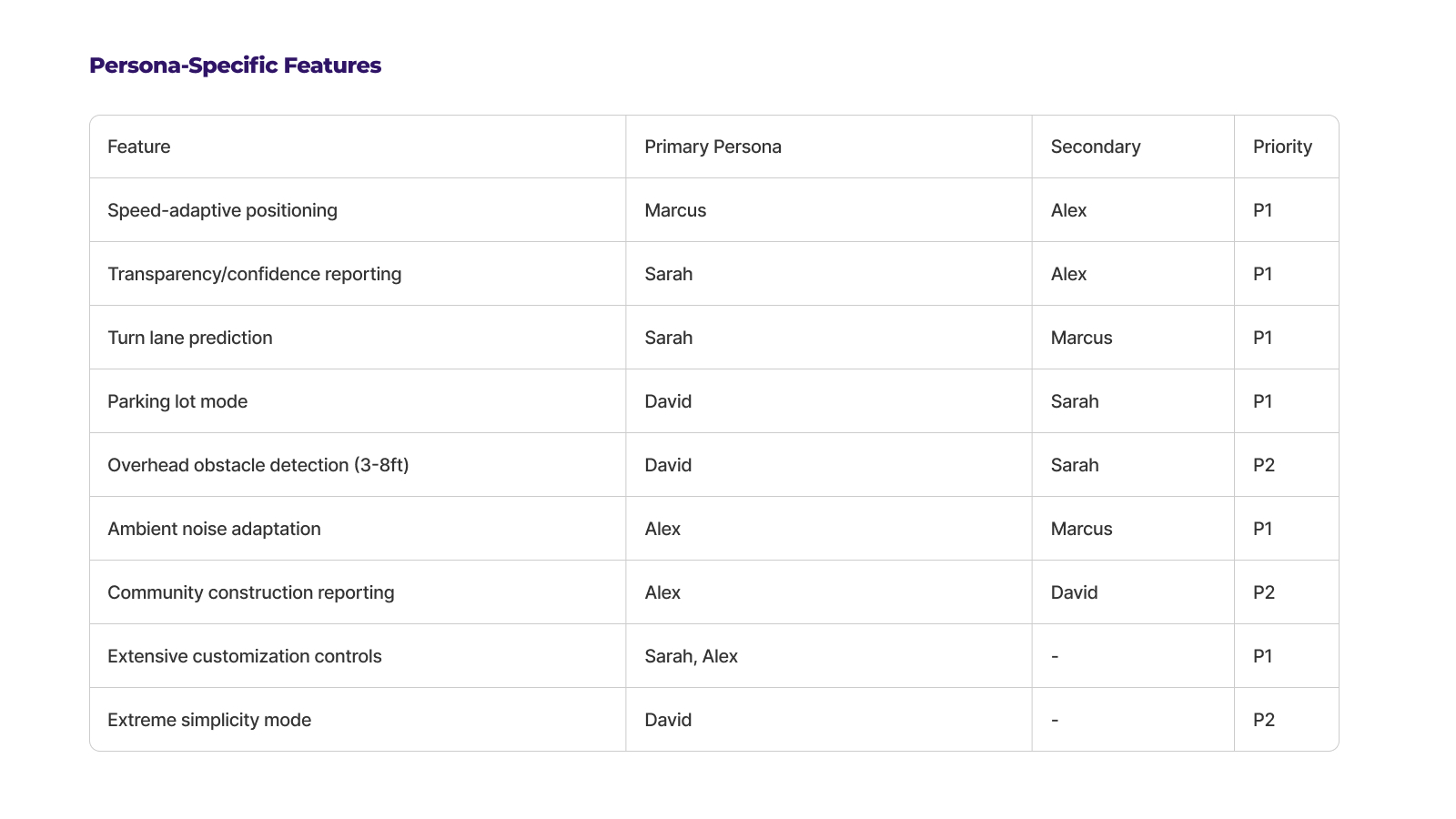

Four Anchor Personas

From the 8 participants, I synthesized four anchor personas that represent distinct safety profiles and design tensions. Each major design decision can be traced back to one of these people.

- • Marcus – The Silent Vehicle Survivor. Hard-of-hearing cane user who relies almost entirely on traffic sound patterns. Electric and hybrid vehicles 'appear out of nowhere' because they make no sound. For Marcus, NeuroNav must detect vehicles he cannot hear and prioritize clear directional alerts over rich scene descriptions.

- • Sarah – The Zero-Tolerance Skeptic. Legally blind professional with no peripheral vision who has already been hit by a vehicle at a crosswalk. A single false negative means permanent abandonment. NeuroNav must surface transparent confidence levels, err on the side of caution, and give her the ability to override and customize behavior.

- • David – The Overhead Obstacle Magnet. Regularly hits his head on low branches, overhangs, and construction equipment because his cane only detects ground-level hazards. He needs simple, early warnings like "low branch ahead," not verbose narratives. For David, NeuroNav's long-term roadmap includes overhead obstacle detection and parking-lot mode.

- • Alex – The Construction Zone Navigator. Lives in a neighborhood with constant construction and daily route changes. Sidewalks close overnight; detours appear with no warning. Alex wants a way to both receive and contribute real-time construction reports so that blind pedestrians can warn each other instead of discovering obstacles with their bodies.

Critical Breakdowns Observed

- • Silent electric vehicles approaching from any direction

- • Turning vehicles that change trajectory unpredictably

- • Weather conditions (rain, wind) masking audio cues

- • Multi-lane confusion at complex intersections

- • Complete inability to judge vehicle distance, speed, or trajectory

User-Expressed Requirements

- • Detection accuracy of at least 90%

- • False positive rates below 10%

- • Combined haptic and sound alerts

- • Simple, learnable interface: "simplicity is king"

- • Functionality in adverse conditions

- • Offline capability for poor connectivity areas

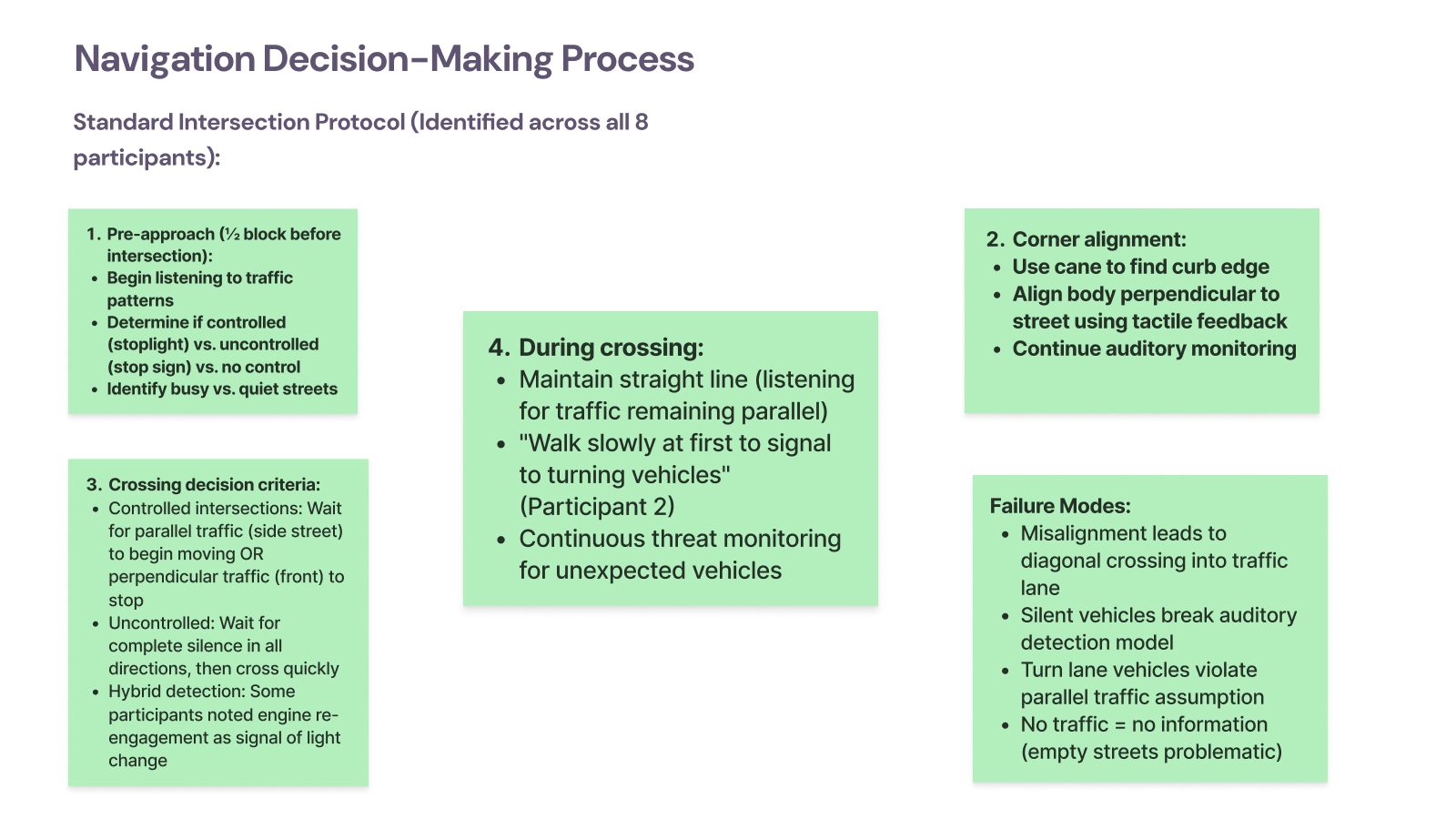

How Blind Pedestrians Actually Cross Intersections

Shadowing participants at real intersections revealed a surprisingly structured mental model for crossing: a four-stage protocol that breaks down precisely where silent vehicles and turn lanes appear.

- Pre-approach (½ block away): Begin listening for traffic patterns; determine if the intersection is controlled (signal/stop sign) or uncontrolled; classify streets as busy vs. quiet.

- Corner alignment: Use the cane to find the curb edge, align the body perpendicular to the street using tactile feedback, and continue monitoring sound.

- Crossing decision:

- • Controlled intersections: wait for parallel traffic to start moving or for perpendicular traffic to stop.

- • Uncontrolled: wait for complete silence in all directions, then cross quickly.

- • Hybrid: some participants used engine re-engagement as an informal signal of light change.

- During crossing: Maintain a straight line using traffic as an auditory reference, walk slowly at first to signal turning vehicles, and continuously monitor for unexpected vehicles.

Observed failure modes:

- • Misalignment leading to diagonal crossing into the traffic lane.

- • Silent vehicles breaking the entire auditory detection model.

- • Turn lane vehicles violating the assumption that parallel traffic is safe.

- • Empty streets providing no information, making "quiet" intersections feel most dangerous.

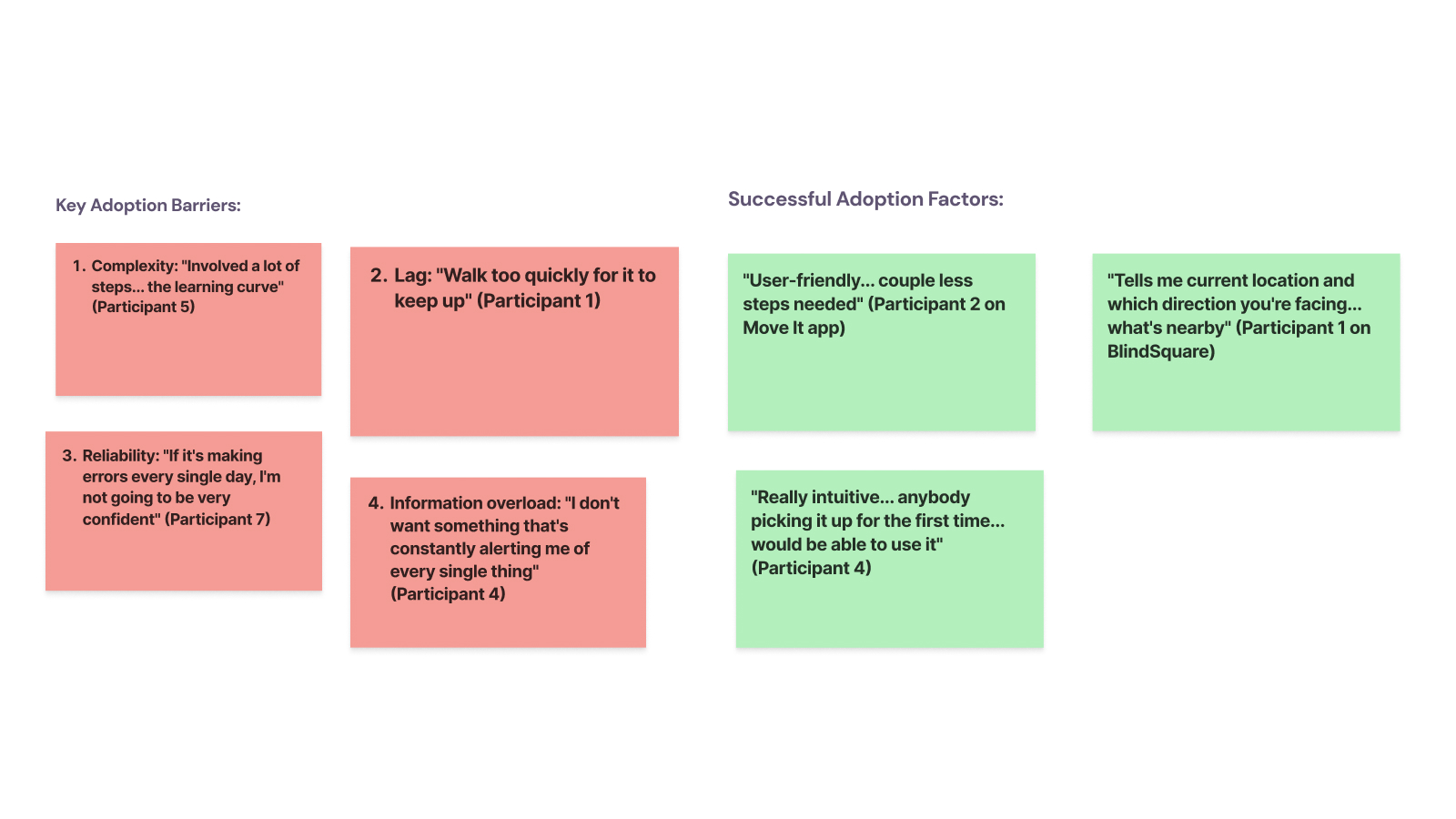

Technology Adoption & Abandonment Pattern

Participants rarely discover new tools through the app store. Almost everything spreads via word of mouth, with an unforgiving trial window: if an app doesn't prove itself in the first 2–3 uses, it is abandoned permanently.

Observed lifecycle:

- • Discovery via friends, blind community listservs, or rehabilitation specialists.

- • Download and quick configuration with help from a sighted friend or trainer.

- • Critical decision point: if the app lags, makes dangerous errors, or feels confusing during the first few attempts, it is deleted and rarely revisited.

- • Successful apps become part of a small, stable "tool belt" used for years.

Key adoption barriers:

- • Complexity: "Involved a lot of steps… the learning curve."

- • Lag: "I walk too quickly for it to keep up."

- • Reliability: "If it's making errors every single day, I'm not going to be very confident."

- • Information overload: "I don't want something that's constantly alerting me of every single thing."

Successful adoption factors:

- • Simple flows with only a few necessary steps.

- • Clear communication of current location and orientation.

- • Interfaces that are intuitive enough for someone to pick up and use on day one.

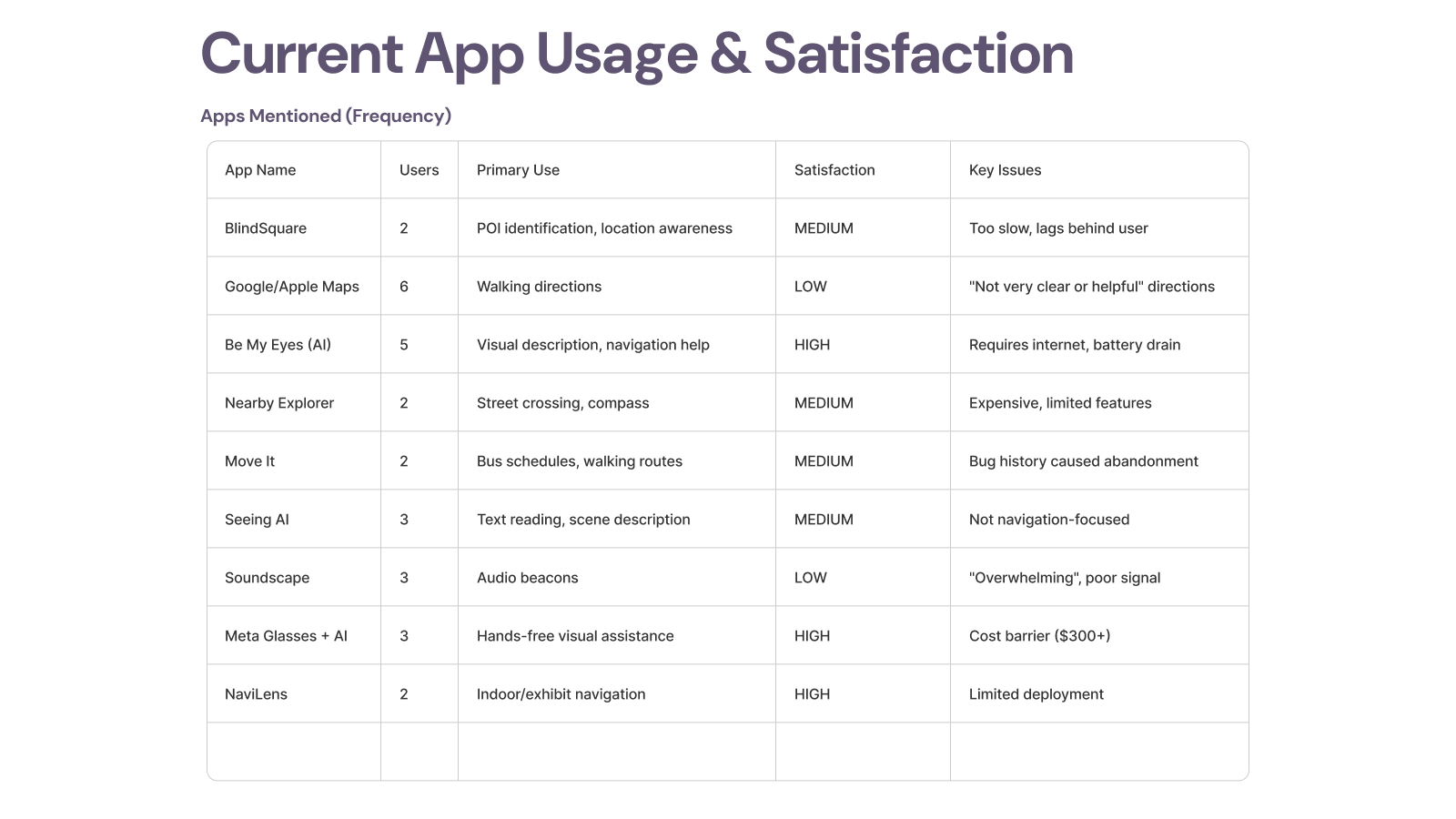

Current App Usage & Satisfaction

Across the 8 participants, I catalogued which apps they currently rely on, what they use them for, and why several have been abandoned. This clarified where NeuroNav should complement existing tools instead of duplicating them.

- • BlindSquare – Used for POI identification and location awareness; generally liked but described as 'too slow' and lagging behind the user.

- • Google/Apple Maps – Used by 6/8 for walking directions; satisfaction was low due to unclear or unhelpful instructions.

- • Be My Eyes (AI) – Popular for visual description; high satisfaction but requires internet and drains battery.

- • Nearby Explorer – Street crossing and compass; considered expensive given limited features.

- • Move It / Transit apps – For bus schedules and routes; some participants abandoned them after reliability issues.

- • Seeing AI – Used for text reading and general scene description; not navigation-focused.

- • Soundscape – Remembered fondly but described as overwhelming and now discontinued.

- • Meta Glasses + AI – Loved for hands-free description but cost-prohibitive for many.

- • NaviLens – Highly rated for indoor/exhibit navigation, but limited by where codes are deployed.

Feature Preferences That Shaped the MVP

When asked to design their ideal solution, participants converged on a surprisingly tight set of must-have features. These directly shaped the NeuroNav MVP scope.

- • Real-time vehicle detection with direction (8/8 – 100%) – "Tell me where the vehicle is coming from: left, right, behind."

- • Distance-based urgency scaling (8/8 – 100%) – Different tones/voice phrasing as a vehicle moves from 50 ft away to right beside the user.

- • Multi-modal alerts (7/8 – 87.5%) – Combination of vibration and sound, with voice reserved for higher-urgency details.

- • Adaptive sound for environment (5/8 – 62.5%) – Softer sounds in quiet neighborhoods; stronger, more pronounced alerts in noisy downtown streets.

- • Minimal false negatives (5/5 asked – 100%) – Participants preferred more false positives over a single missed vehicle.

- • Personalization vs. consistency – Half wanted route learning and personalization; the other half preferred predictable behavior with explicit switches they could control.

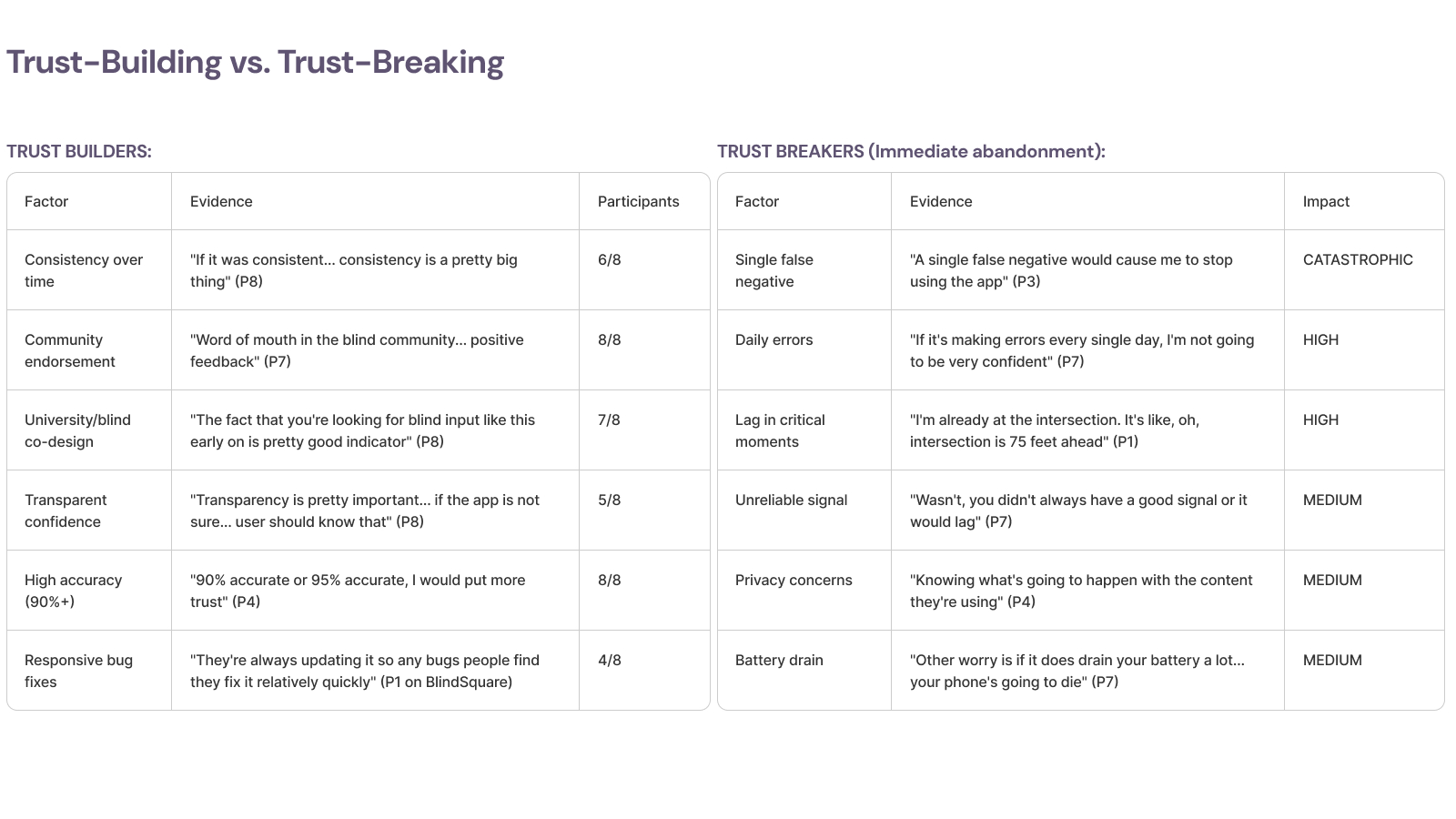

Trust-Building vs. Trust-Breaking Signals

For this audience, trust is binary: either the system behaves perfectly within its stated responsibilities, or it is abandoned. These signals translated directly into NeuroNav's design principles and success metrics.

Trust builders:

- • Consistency over time – 'If it was consistent… consistency is a pretty big thing.'

- • Community endorsement – positive word of mouth in the blind community.

- • University / blind-organization co-design – evidence that blind users shaped the system early.

- • Transparent confidence levels – communicating when the system is uncertain.

- • High accuracy targets (90–99%+) – participants explicitly attached trust to numeric performance.

- • Responsive bug fixes – apps that address issues quickly earn long-term loyalty.

Trust breakers (immediate abandonment):

- • A single false negative – missing one real vehicle is catastrophic.

- • Daily errors – even minor mistakes erode confidence rapidly.

- • Lag in critical moments – alerts that fire when the user is already at the intersection.

- • Unreliable signal or GPS dropout.

- • Privacy concerns and unclear data use.

- • Extreme battery drain that threatens the usability of the phone itself.

- • 50%: target reduction in near-miss incidents

- • 4.5/5: target trust score in longitudinal testing

- • 60%: target retention over 3 months

Design Rationales & Tradeoffs

Designing for safety-critical accessibility required navigating several fundamental tensions:

Feature Mapping to Value Propositions

Every major feature in NeuroNav was explicitly mapped to at least one persona and one value proposition. This prevented me from adding 'cool' features that didn't reduce actual risk.

- • Real-time vehicle detection (including electric) – Critical for Marcus, Sarah, David, and Alex; top P0 feature.

- • Directional detection (front/behind/left/right) – Primarily for Marcus and Sarah to resolve ambiguity at crossings.

- • Distance-based urgency scaling – Shared across all personas to distinguish 'near miss' vs 'immediate threat'.

- • Multi-modal alerts (vibration + sound + voice) – Ensures redundancy when audio channels are noisy or users have partial hearing loss.

- • Sub-500ms latency + 99%+ target accuracy – Core trust contract; explicitly linked to Sarah's "single false negative" requirement.

Battery Life vs. Detection Quality

Higher frame rates improve detection responsiveness but drain batteries faster. I landed on 10 FPS as the optimal balance: fast enough for pedestrian-speed scenarios, efficient enough for sustained use. Adaptive frame processing scales the rate down when the user is stationary.
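The following is a minimal sketch of how adaptive frame processing could sit on top of the 30fps camera feed listed in the detection pipeline. It assumes inference simply skips captured frames. The 10 FPS moving rate comes from this case study. The 5 FPS stationary rate and the function names are hypothetical placeholders, not NeuroNav's actual values. Deciding whether the user is moving (for example, from IMU data) is left out.

```python
# Hypothetical sketch: choose how often to run inference on a 30 fps camera feed.
CAPTURE_FPS = 30               # camera feed rate listed in the detection pipeline
MOVING_INFERENCE_FPS = 10      # inference rate stated in the case study
STATIONARY_INFERENCE_FPS = 5   # hypothetical reduced rate while the user is still


def inference_stride(user_is_moving: bool) -> int:
    """Return N such that inference runs on every Nth captured frame."""
    target_fps = MOVING_INFERENCE_FPS if user_is_moving else STATIONARY_INFERENCE_FPS
    return max(1, CAPTURE_FPS // target_fps)


def should_run_inference(frame_index: int, user_is_moving: bool) -> bool:
    """True when this captured frame should be sent to the detector."""
    return frame_index % inference_stride(user_is_moving) == 0


if __name__ == "__main__":
    for moving in (True, False):
        runs = sum(should_run_inference(i, moving) for i in range(CAPTURE_FPS))
        print(f"moving={moving}: {runs} inferences per second")
```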

Information Richness vs. Cognitive Load

Users need distance, direction, vehicle type, and urgency, but receiving all of them at once overwhelms processing. I implemented progressive disclosure: a minimal initial alert, with detailed information available on demand or added as the threat level escalates.
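Progressive disclosure can be expressed in code; the following is a sketch. The three urgency tiers mirror the three haptic severity tiers in this case study (distant, approaching, immediate). The wording for each tier, the `Detection` type, and the `details_requested` flag are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Detection:
    vehicle_type: str   # e.g. "car"; make/model deliberately omitted
    direction: str      # "left", "right", "ahead", or "behind"
    distance_ft: int    # estimated distance in feet


def build_announcement(d: Detection, urgency: str,
                       details_requested: bool = False) -> Optional[str]:
    """Return the spoken text for a detection, or None for haptics-only alerts.

    Hypothetical tiering: the announcement grows with urgency, and the user
    can always ask for the full version on demand.
    """
    full = f"{d.vehicle_type.capitalize()} approaching from {d.direction}, {d.distance_ft} feet."
    if details_requested or urgency == "immediate":
        return full
    if urgency == "approaching":
        return f"{d.vehicle_type.capitalize()}, {d.direction}."
    return None  # "distant": a gentle haptic pulse, no speech


car = Detection("car", "left", 40)
print(build_announcement(car, "approaching"))  # Car, left.
print(build_announcement(car, "immediate"))    # Car approaching from left, 40 feet.
```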

Alert Frequency vs. Alert Fatigue

A system that alerts on every vehicle becomes noise; one that filters too aggressively misses threats. I developed context-aware throttling that considers vehicle trajectory, user movement, and historical patterns.
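The following is a hedged sketch of per-vehicle throttling. It covers only part of the throttling described:

- **Trajectory:** receding vehicles are suppressed unless they are already at the immediate tier.
- **Escalation:** a rise in urgency always triggers a new alert.

User movement and historical patterns are not modeled. The 3-second cooldown, the tier names, and the tracker-assigned `track_id` are assumptions.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple

URGENCY_RANK = {"distant": 0, "approaching": 1, "immediate": 2}


@dataclass
class AlertThrottle:
    """Hypothetical per-vehicle throttle; track IDs come from the tracker."""
    cooldown_s: float = 3.0  # hypothetical minimum gap between repeat alerts
    _last: Dict[int, Tuple[float, int]] = field(default_factory=dict)

    def should_alert(self, track_id: int, urgency: str,
                     closing_speed_fps: float, now_s: float) -> bool:
        rank = URGENCY_RANK[urgency]
        # Trajectory: ignore receding vehicles unless already at the top tier.
        if closing_speed_fps <= 0 and rank < URGENCY_RANK["immediate"]:
            return False
        previous = self._last.get(track_id)
        escalated = previous is not None and rank > previous[1]
        cooled_down = previous is None or now_s - previous[0] >= self.cooldown_s
        if escalated or cooled_down:
            self._last[track_id] = (now_s, rank)
            return True
        return False


throttle = AlertThrottle()
print(throttle.should_alert(1, "approaching", 5.0, now_s=0.0))  # True: first sighting
print(throttle.should_alert(1, "approaching", 5.0, now_s=1.0))  # False: repeat within cooldown
print(throttle.should_alert(1, "immediate", 5.0, now_s=1.5))    # True: urgency escalated
```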

Trust Brittleness (Hardest Challenge)

Unlike sighted users, who can verify outputs, blind users must trust the system completely; one false negative shatters trust permanently. Design philosophy: safety over convenience, always. When in doubt, alert.
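Here is a minimal sketch that makes "when in doubt, alert" concrete. It is a recall-biased decision rule that also supports transparent confidence: low-confidence detections still trigger an alert but are flagged as uncertain rather than dropped. Both thresholds are hypothetical. The case study says only that the YOLOv8 confidence threshold is tuned for safety.

```python
# Hypothetical thresholds; real values would come from Phase 2 benchmarking.
ALERT_THRESHOLD = 0.25      # deliberately low: favor false positives over misses
CONFIDENT_THRESHOLD = 0.60  # above this, no uncertainty cue is needed


def alert_decision(confidence: float) -> str:
    """Map a detector confidence score in [0, 1] to an alert decision."""
    if confidence >= CONFIDENT_THRESHOLD:
        return "alert"
    if confidence >= ALERT_THRESHOLD:
        return "alert_uncertain"  # still alert, but tell the user the system is unsure
    return "ignore"


for score in (0.9, 0.4, 0.1):
    print(score, alert_decision(score))
```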

Core Design Principles

- • Voice-first interaction with touch as optional enhancement

- • Transparent system confidence: users know when the system is uncertain

- • Cultural and economic accessibility: runs on standard smartphones

- • Redundant multimodal sensing: if one channel fails, another compensates

- • Adaptive audio for quiet and noisy environments

- • Distinct phonetic patterns for critical directional information

The Solution: Prototype Architecture

NeuroNav transforms the smartphone camera into an "electronic eye," running YOLOv8 object detection to identify vehicles in real-time. When a vehicle is detected, the system calculates distance (0–100 feet), determines direction relative to user orientation, classifies vehicle type, and assesses urgency level, then communicates all of this through synchronized multimodal feedback. The computer vision pipeline is implemented and operational; Phase 1 testing evaluated whether blind users could comprehend, trust, and act on the multimodal alert grammar before committing resources to formal perception benchmarking.

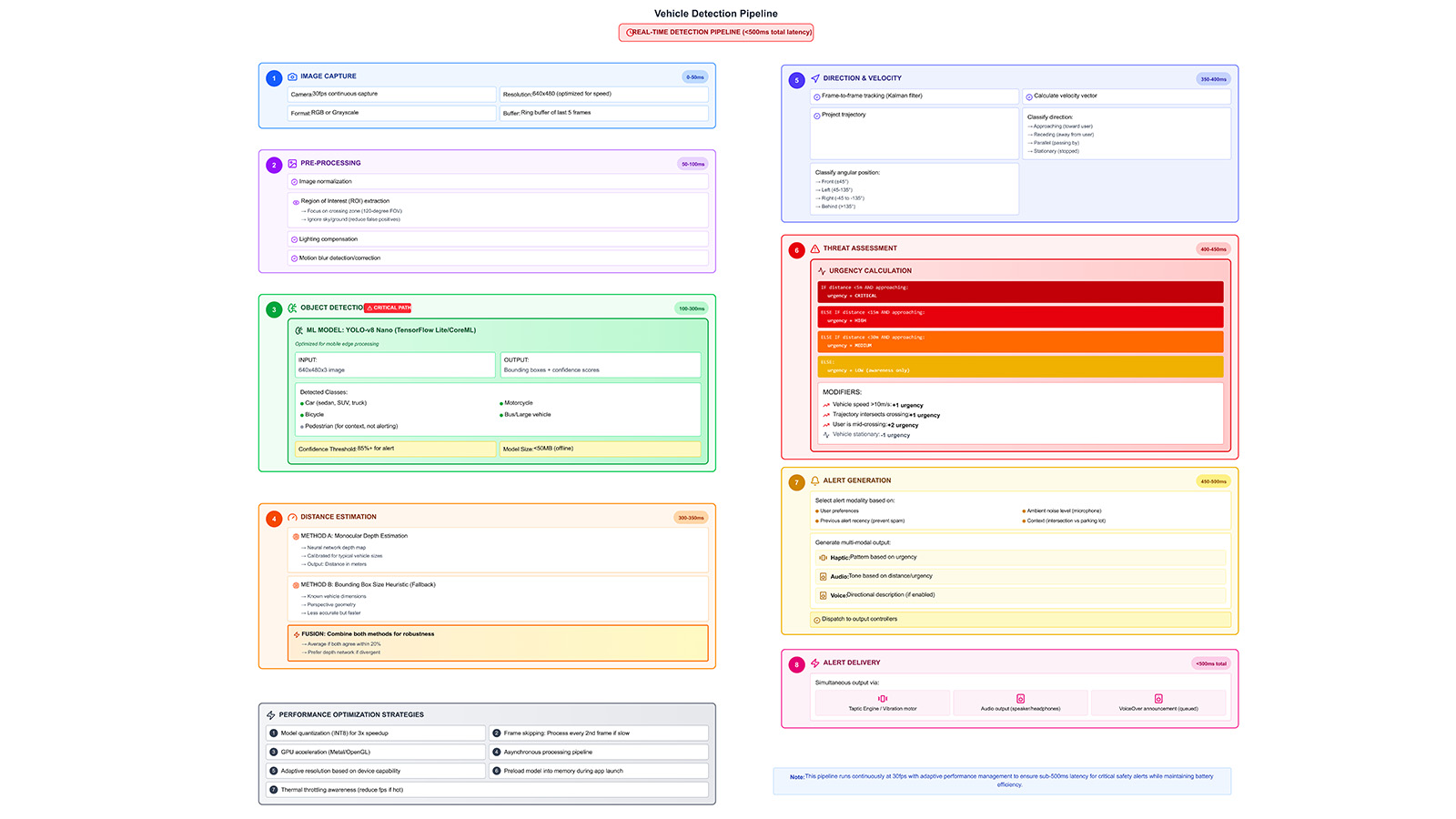

Real-Time Vehicle Detection Pipeline (<500ms)

Under the hood, NeuroNav runs a tightly constrained pipeline to keep total latency under 500ms while preserving battery life. The camera stream is normalized and cropped to a region of interest, then passed through a YOLOv8-nano model. The results are fused with depth estimation and Kalman-filter tracking to infer distance, direction, and urgency. Phase 1 validated that users can interpret and act on the alerts this pipeline generates; Phase 2 will benchmark detection accuracy and field reliability across diverse real-world conditions.

- • Image capture (30fps camera feed, RGB/greyscale).

- • Pre-processing (ROI extraction, normalization, motion filtering).

- • ML inference (YOLOv8-nano on-device, confidence threshold tuned for safety).

- • Distance estimation (monocular depth + bounding-box heuristics).

- • Direction & velocity (frame-to-frame tracking and heading classification).

- • Threat assessment (urgency scoring based on distance × speed × approach angle).

- • Alert generation (multimodal selection based on urgency and context).
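The distance, tracking, and threat-assessment steps can be sketched as follows. This is an illustration under stated assumptions, not NeuroNav's implementation:

- **Distance:** uses only the bounding-box heuristic (a pinhole model with hypothetical typical vehicle heights), with no monocular depth fusion.
- **Tracking:** a textbook 1D constant-velocity Kalman filter over distance.
- **Urgency:** one plausible reading of "distance × speed × approach angle". It takes closing speed, weights it by how directly the vehicle heads toward the user, and divides by distance, which amounts to an inverse time-to-contact.

All tier cut-offs are hypothetical.

```python
import math

# Hypothetical typical vehicle heights (feet) for the pinhole-model heuristic.
VEHICLE_HEIGHT_FT = {"car": 5.0, "truck": 10.0, "bus": 10.5}
MAX_RANGE_FT = 100.0  # the case study reports distances in the 0-100 ft range


def bbox_distance_ft(bbox_height_px: float, focal_length_px: float, vehicle_type: str) -> float:
    """Pinhole estimate: distance = focal length * real height / image height."""
    distance = focal_length_px * VEHICLE_HEIGHT_FT[vehicle_type] / bbox_height_px
    return min(distance, MAX_RANGE_FT)


class DistanceKalman:
    """1D constant-velocity Kalman filter over distance (ft) and its rate (ft/s)."""

    def __init__(self, d0: float, accel_var: float = 25.0, meas_var: float = 4.0):
        self.x = [d0, 0.0]                        # state: distance, rate of change
        self.P = [[meas_var, 0.0], [0.0, 100.0]]  # initial covariance
        self.q, self.r = accel_var, meas_var

    def step(self, z: float, dt: float) -> None:
        """Predict forward by dt seconds, then correct with measured distance z."""
        d, v = self.x[0] + self.x[1] * dt, self.x[1]
        (p00, p01), (p10, p11) = self.P
        q = self.q
        # P = F P F^T + Q, with white-noise-acceleration process noise Q
        p00, p01, p10, p11 = (
            p00 + dt * (p01 + p10) + dt * dt * p11 + q * dt**4 / 4,
            p01 + dt * p11 + q * dt**3 / 2,
            p10 + dt * p11 + q * dt**3 / 2,
            p11 + q * dt**2,
        )
        # Update with a distance-only measurement (H = [1, 0])
        s = p00 + self.r
        k0, k1 = p00 / s, p10 / s
        y = z - d
        self.x = [d + k0 * y, v + k1 * y]
        self.P = [[(1 - k0) * p00, (1 - k0) * p01],
                  [p10 - k1 * p00, p11 - k1 * p01]]

    @property
    def closing_speed_fps(self) -> float:
        """Positive when the vehicle is getting closer."""
        return -self.x[1]


def urgency_tier(distance_ft: float, closing_speed_fps: float, approach_angle_deg: float) -> str:
    """Score = closing speed * heading factor / distance (an inverse time-to-contact)."""
    heading_factor = max(0.0, math.cos(math.radians(approach_angle_deg)))  # 0 deg = head-on
    score = max(0.0, closing_speed_fps) * heading_factor / max(distance_ft, 1.0)
    if score >= 1 / 3 or distance_ft <= 15:  # hypothetical: contact within ~3 s, or very close
        return "immediate"
    if score >= 0.1 or distance_ft <= 50:    # hypothetical: contact within ~10 s
        return "approaching"
    return "distant"


if __name__ == "__main__":
    kf = DistanceKalman(d0=100.0)
    for k in range(1, 141):                      # 14 s of frames at 10 FPS
        kf.step(100.0 - 5.0 * 0.1 * k, dt=0.1)   # noise-free 5 ft/s approach
    print(round(kf.x[0], 1), round(kf.closing_speed_fps, 1))
    print(urgency_tier(kf.x[0], kf.closing_speed_fps, approach_angle_deg=0.0))
```

Because the filter tracks closing speed rather than raw distance, a parked car and an approaching car at the same range get different tiers. The sketch keeps the distance floor so very close vehicles still alert, in line with "when in doubt, alert".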

Key Technical Breakthroughs

Context-Aware Orientation

Rather than generic "vehicle detected" alerts, NeuroNav provides spatially grounded information: "Car approaching from left, 40 feet." This eliminated the orientation ambiguity of early prototypes.
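Here is a minimal sketch of how a camera-relative direction label could be derived from a detection's bounding box. It assumes a forward-facing camera with a known horizontal field of view and an ideal pinhole lens. The 60° field of view and the ±10° "ahead" band are hypothetical; the case study does not specify how NeuroNav computes direction. A single forward camera can only distinguish left, ahead, and right.

```python
import math


def bearing_from_bbox(center_x_px: float, image_width_px: float, hfov_deg: float = 60.0) -> float:
    """Horizontal angle (degrees) of the box center; negative = left of the camera axis."""
    # Focal length in pixels for an ideal pinhole camera with this field of view
    focal_px = (image_width_px / 2) / math.tan(math.radians(hfov_deg / 2))
    return math.degrees(math.atan((center_x_px - image_width_px / 2) / focal_px))


def direction_label(bearing_deg: float, ahead_band_deg: float = 10.0) -> str:
    """Collapse a bearing into the coarse words users asked for."""
    if bearing_deg < -ahead_band_deg:
        return "left"
    if bearing_deg > ahead_band_deg:
        return "right"
    return "ahead"


bearing = bearing_from_bbox(center_x_px=80, image_width_px=640)
print(f"{bearing:.1f} degrees -> {direction_label(bearing)}")
```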

HapticController Severity Tiers

Three distinct haptic patterns: gentle pulse (distant), rhythmic vibration (approaching), continuous urgent buzz (immediate threat). Participants learned patterns within minutes.
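The three severity tiers could be encoded as vibration timing patterns along these lines. The millisecond values are hypothetical. Each list alternates off and on durations, starting with an initial delay. This is the same shape as the timings array accepted by Android's `VibrationEffect.createWaveform`.

```python
from typing import Dict, List

# Alternating [off, on, off, on, ...] durations in milliseconds (values hypothetical).
HAPTIC_PATTERNS_MS: Dict[str, List[int]] = {
    "distant":     [0, 80],                       # gentle single pulse
    "approaching": [0, 150, 150, 150, 150, 150],  # rhythmic: three pulses
    "immediate":   [0, 1000],                     # continuous urgent buzz
}


def pulse_count(pattern: List[int]) -> int:
    """Number of 'on' segments (odd indices) in an off/on timing list."""
    return sum(1 for i, ms in enumerate(pattern) if i % 2 == 1 and ms > 0)


def on_time_ms(pattern: List[int]) -> int:
    """Total vibration time; grows with severity so tiers feel distinct."""
    return sum(pattern[1::2])


for tier, pattern in HAPTIC_PATTERNS_MS.items():
    print(tier, pulse_count(pattern), on_time_ms(pattern))
```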

VoiceOverBridge Integration

Native bridge allowing detection system to interrupt screen reader announcements for critical alerts while maintaining screen reader functionality for UI navigation.
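The bridge's priority logic can be modeled in plain Python as a sketch. This is not VoiceOver or TalkBack API code. `SpeechArbiter` is a hypothetical stand-in that shows one possible policy:

- Critical alerts interrupt whatever the screen reader is saying.
- The interrupted UI utterance is re-queued, so the user's place in the navigation is not lost.

```python
from collections import deque
from typing import Deque, Optional, Tuple

Utterance = Tuple[str, bool]  # (text, is_critical)


class SpeechArbiter:
    """Hypothetical model of a screen-reader bridge's interruption policy."""

    def __init__(self) -> None:
        self.speaking: Optional[Utterance] = None
        self.pending: Deque[Utterance] = deque()

    def request(self, text: str, critical: bool = False) -> None:
        if critical:
            # Interrupt ordinary UI speech and resume it after the alert.
            if self.speaking is not None and not self.speaking[1]:
                self.pending.appendleft(self.speaking)
            # A newer critical alert supersedes an older one (fresher information).
            self.speaking = (text, True)
        elif self.speaking is None:
            self.speaking = (text, False)
        else:
            self.pending.append((text, False))

    def finish_current(self) -> Optional[Utterance]:
        """Called when the current utterance ends; returns the next one, if any."""
        self.speaking = self.pending.popleft() if self.pending else None
        return self.speaking


arbiter = SpeechArbiter()
arbiter.request("Settings, button")
arbiter.request("Car approaching from left, 40 feet.", critical=True)
print(arbiter.speaking)          # ('Car approaching from left, 40 feet.', True)
print(arbiter.finish_current())  # ('Settings, button', False)
```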

Simplified Semantic Hierarchy

Flat, predictable structure with WCAG AAA compliant touch targets (80+ pixels) and logical focus order. I redesigned it after realizing early prototypes assumed visual scanning.

GPU Inference Scheduling

Frame-aware scheduling prioritizes completing inference before the next camera frame arrives, maintaining consistent 10 FPS performance without dropped detections.
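A common way to keep inference from falling behind the camera is a single-slot "latest frame wins" buffer. Frames that arrive while the model is busy replace one another, so the detector always works on the freshest image and no backlog builds up. The simulation below is a hypothetical CPU-side illustration in 1 ms ticks, not NeuroNav's GPU scheduler. It assumes a 33 ms capture interval and a 100 ms inference budget.

```python
from typing import Any, List, Optional, Tuple


class LatestFrameSlot:
    """Single-slot buffer: a new frame replaces any frame not yet consumed."""

    def __init__(self) -> None:
        self._frame: Optional[Any] = None
        self.dropped = 0  # stale frames overwritten before inference saw them

    def put(self, frame: Any) -> None:
        if self._frame is not None:
            self.dropped += 1
        self._frame = frame

    def take(self) -> Optional[Any]:
        frame, self._frame = self._frame, None
        return frame


def simulate(duration_ms: int = 1000, capture_every_ms: int = 33,
             inference_ms: int = 100) -> Tuple[List[int], int]:
    """Step through time in 1 ms ticks; return processed frame ids and drop count."""
    slot = LatestFrameSlot()
    processed: List[int] = []
    busy_until = 0
    for t in range(duration_ms):
        if t % capture_every_ms == 0:
            slot.put(t // capture_every_ms)  # camera delivers a new frame id
        if t >= busy_until:
            frame = slot.take()
            if frame is not None:
                processed.append(frame)      # start inference on the newest frame
                busy_until = t + inference_ms
    return processed, slot.dropped


frames, dropped = simulate()
print(len(frames), "inferences;", dropped, "stale frames skipped")  # 10 inferences; 20 stale frames skipped
```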

Synchronized Timing

All modalities begin within 50ms of each other, creating a unified "alert event" rather than competing signals. This fixed the cognitive dissonance of early versions, in which haptic timing conflicted with voice.
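Here is a sketch of one way to hit the 50 ms window. It assumes each output channel has a roughly known startup latency; the numbers below are hypothetical. The alert manager delays the faster channels so that every modality is expected to begin together, then checks the remaining skew against the 50 ms budget.

```python
from typing import Dict

SYNC_WINDOW_MS = 50.0  # from the case study: all modalities start within 50 ms


def dispatch_delays(startup_latency_ms: Dict[str, float]) -> Dict[str, float]:
    """Delay each channel so all are expected to start with the slowest one."""
    slowest = max(startup_latency_ms.values())
    return {name: slowest - latency for name, latency in startup_latency_ms.items()}


def start_skew_ms(startup_latency_ms: Dict[str, float], delays: Dict[str, float]) -> float:
    """Spread between the earliest and latest expected start times."""
    starts = [delays[name] + latency for name, latency in startup_latency_ms.items()]
    return max(starts) - min(starts)


latency = {"haptic": 10.0, "voice": 120.0, "tone": 30.0}  # hypothetical measurements
naive = {name: 0.0 for name in latency}
planned = dispatch_delays(latency)
print("naive skew:", start_skew_ms(latency, naive))      # 110 ms, outside the window
print("planned skew:", start_skew_ms(latency, planned))  # 0 ms, inside the window
```

In practice, the measured latencies would drift, which is why a tolerance window rather than exact simultaneity is the useful target.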

The following demo walkthrough showcases the voice-first UI architecture, demonstrating how real-time vehicle detections are translated into natural language announcements, haptic patterns, and optional audio tones that blind users can interpret without visual feedback.

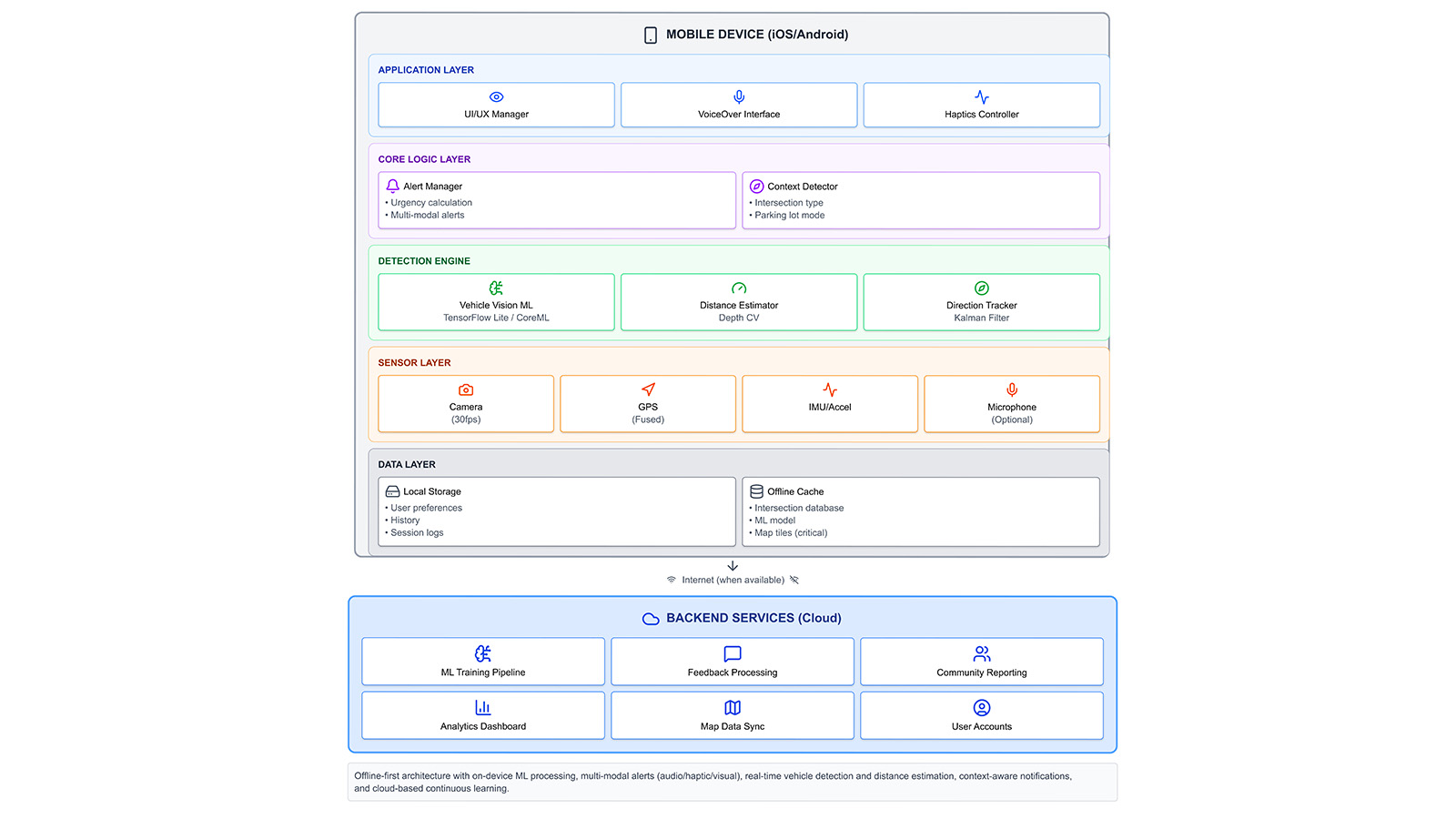

Technical Architecture

On-Device First, Cloud-Augmented Architecture

The architecture is intentionally offline-first: all safety-critical detection, urgency calculation, and multimodal alerts run entirely on the user's phone. Optional cloud services handle slower-moving tasks like analytics, map updates, and model retraining.

- • Application layer: UI manager, VoiceOver/TalkBack interface, and haptics controller orchestrate alerts without breaking existing accessibility workflows.

- • Core logic layer: An alert manager, context detector (intersection vs parking lot), distance estimator, and direction tracker coordinate so that only meaningful threats generate alerts.

- • Sensor layer: Fused camera + GPS + IMU; microphone is optional for future hybrid sensing.

- • Data layer: Local storage for preferences and logs; offline cache for critical intersection and model data; cloud sync when internet is available.

- • Backend services: Training pipeline, feedback processing, community reporting, and analytics dashboards used to improve the model over time.

Computer Vision Pipeline

NeuroNav uses YOLOv8 for real-time object detection, running entirely on-device via Unity's Barracuda inference engine. The pipeline is implemented and operational; Phase 2 will formally benchmark accuracy across diverse lighting conditions, weather, and vehicle types. The on-device architecture was chosen deliberately:

- • Cloud-based inference → unacceptable latency for safety

- • Internet dependency → unreliable in navigation scenarios

- • Privacy concerns → no camera footage to remote servers

- • On-device achieves ~10 FPS with 90%+ accuracy in lab conditions (field reliability pending Phase 2 benchmarking)

AR Foundation Integration

AR Foundation provides device orientation, motion tracking, and camera access. Critically, it enables context-aware alerts:

- • Accounts for user movement and trajectory

- • Understands spatial relationship between user and vehicle

- • Prioritizes genuinely threatening approaches

- • Not just detection, but spatial intelligence
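To illustrate that spatial reasoning, here is a sketch that turns tracked positions into the four user-relative directions participants asked for (front, behind, left, right). It makes these assumptions:

- Positions are projected onto the ground plane in a Unity-style frame: x to the right, z forward, and yaw measured clockwise from +z when viewed from above.
- The user's pose is passed in as plain numbers rather than read from AR Foundation.
- The 45° sector boundaries are hypothetical.

```python
import math
from typing import Tuple


def relative_direction(user_xz: Tuple[float, float], user_yaw_deg: float,
                       vehicle_xz: Tuple[float, float]) -> str:
    """Classify a vehicle as ahead/right/behind/left of the user.

    Assumes a Unity-style ground plane: x to the right, z forward, and yaw
    measured clockwise from +z when viewed from above.
    """
    dx = vehicle_xz[0] - user_xz[0]
    dz = vehicle_xz[1] - user_xz[1]
    bearing = math.degrees(math.atan2(dx, dz))              # world bearing of the vehicle
    rel = (bearing - user_yaw_deg + 180.0) % 360.0 - 180.0  # wrap to [-180, 180)
    if -45.0 <= rel <= 45.0:
        return "ahead"
    if 45.0 < rel < 135.0:
        return "right"
    if -135.0 < rel < -45.0:
        return "left"
    return "behind"


# User facing +x (yaw 90): a vehicle toward +z is on their left.
print(relative_direction((0.0, 0.0), 90.0, (0.0, 10.0)))  # left
```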

User Testing Results

Phase 1 evaluation with blind users at the Iowa Department for the Blind focused on the human-AI interaction layer: trust calibration, comprehension of the alert grammar, cognitive load under simulated scenarios, accessibility architecture, and decision-making confidence. These metrics de-risk adoption before scaling the perception model, because a perfectly accurate CV system that users cannot understand, customize, or trust is a system no one will use.

- • 100%: comprehension of system purpose within 2 minutes

- • 66%: adoption intent for regular use

- • 6.2/7: voice announcements rating

- • 6.8/7: settings/customization rating

Key Findings

- • 40% experienced sensory overload from audio tones

- • Voice + haptics = optimal modality combination

- • Customization panel was highest-rated feature

- • TalkBack integration cited as adoption prerequisite

Participant Insights

- • P3: An iPhone/VoiceOver power user whose feedback informed the VoiceOverBridge architecture

- • P5: Directional hearing challenges validated the importance of haptic redundancy

- • P6: Strong preference for natural TTS voices over robotic synthesis

The following video captures a user testing session with visually impaired participants at the Iowa Department for the Blind, where we evaluated the multimodal interaction layer including voice announcements, haptic feedback patterns, and customization controls.

Participant Quotes

"This would boost my confidence walking outside."

— P6

"The voice is perfect, just tell me left, right, and distance."

— P2

"Tones get too much. Let me turn them off."

— P4

"Make the buttons bigger… like way bigger."

— P1

What Worked & What Didn't

Phase 1 testing validated the alert grammar, voice design, haptic encoding, and accessibility architecture as production-grade. These findings establish that the human-AI interface is ready for field deployment; the remaining work is perception model benchmarking and real-world reliability validation.

What Worked

- ✓ Voice announcements overwhelmingly preferred; natural language matched users' mental models

- ✓ Haptic patterns intuitive; users interpreted severity levels within minutes

- ✓ Customization panel highly valued; users want control over safety technology

- ✓ Context-aware orientation eliminated directional confusion

- ✓ On-device detection solved two trust issues at once: privacy and reliability

- ✓ Redundant multi-sense feedback improved adoption confidence

What Didn't Work

- ✗ Audio tones overloaded sensory channels → Made tones opt-in

- ✗ AR camera feed initially caused latency → GPU scheduling optimization

- ✗ Early UI too visual → Restructured around screen reader patterns

- ✗ No TalkBack integration → #1 priority for next cycle

- ✗ Haptic timing conflicted with voice → 50ms sync window

- ✗ Initial alerts too verbose → Removed irrelevant vehicle make/model
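Taken together, these fixes imply a set of default alert preferences. Here is a minimal sketch, assuming a simple preferences object behind the customization panel. The field names are hypothetical. The defaults follow the findings reported here: voice and haptics on, tones opt-in, and no make/model detail.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class AlertPreferences:
    """Hypothetical preferences object behind the customization panel."""
    voice: bool = True                 # most preferred channel in testing
    haptics: bool = True               # voice + haptics was the best combination
    tones: bool = False                # opt-in after 40% reported sensory overload
    announce_make_model: bool = False  # removed as irrelevant detail

    def active_modalities(self) -> List[str]:
        return [name for name in ("voice", "haptics", "tones") if getattr(self, name)]


print(AlertPreferences().active_modalities())            # ['voice', 'haptics']
print(AlertPreferences(tones=True).active_modalities())  # ['voice', 'haptics', 'tones']
```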

Final Design Principles

Phase 1 validation crystallized a set of design principles for safety-critical assistive technology that will guide perception benchmarking and field trials:

1. Safety Over Convenience

When the system is uncertain, it alerts. False positives are recoverable; false negatives are not. Every design decision filters through this principle.

2. Trust Is Binary & Fragile

Users either trust the system completely or don't use it. One missed vehicle destroys trust. Design for 100% reliability, not 99%.

3. Multimodal Redundancy

Any single sensory channel can fail. Multiple parallel channels ensure the message gets through regardless of environment or context.

4. User Agency Builds Adoption

The customization panel was the highest-rated feature. Users want control over technology affecting their safety. Provide meaningful choices without overwhelming complexity.

5. Context Determines Content

Relative directions (left/right) beat absolute (north/south). Threat level matters more than vehicle type. Information should be actionable, not comprehensive.

6. Privacy = Reliability

On-device processing addresses both concerns simultaneously. Users trust systems that don't require internet or transmit location data.

7. Design With, Not For

Every major design decision was validated with blind users before implementation. My assumptions (like the value of audio tones) were often wrong; their expertise was essential.

The Way Forward

With the human-AI interaction layer validated, the roadmap shifts to perception benchmarking and field reliability studies. The interface architecture is production-grade; Phase 2 will establish CV accuracy baselines across diverse environments, lighting conditions, and vehicle types before controlled field trials with navigation partners.

Phase 2: Immediate (3 Months)

- • Full TalkBack and VoiceOver integration

- • Haptic pattern validation study

- • Natural TTS voice selection system

- • Alert throttling logic refinement

Phase 3: Medium-Term (6-12 Months)

- • Environmental mode switching (rain, wind, parking lot)

- • ML confidence scoring for users

- • Meta Ray-Ban glasses integration (Jan 2026)

Long-Term Vision

- • Historical analytics for dangerous intersections

- • Iowa DOT partnership for beta testing

- • National outreach to NFB, ACB

- • Open-source release of architecture

Reflections

NeuroNav taught me that designing for accessibility isn't about adding features to an existing design; it's about fundamentally reconsidering what design means when your users experience the world through different sensory channels. Phase 1 proved something critical: the human-AI interaction layer for a safety-critical perception system can be validated independently of field deployment, and doing so de-risks the entire product roadmap.

I started this project with technical confidence. I knew how to run neural networks on mobile devices, how to build AR applications, how to design multimodal interfaces. What I didn't know was how profoundly my own visual bias had shaped my design intuitions. My early prototypes were beautiful on screen and useless to my actual users.

The breakthrough came when I stopped thinking about "accessibility features" and started thinking about "sensory translation." A car approaching silently is a visual phenomenon. My job was to translate that visual information into auditory and tactile channels that blind users already understand and trust.

The research process was humbling. I had assumed audio tones would be valuable (they weren't). I had assumed detailed vehicle information would be useful (it wasn't). I had assumed my interface was accessible because it had proper semantic markup (it wasn't, because it assumed visual scanning patterns). Every assumption challenged, every certainty overturned.

Key Takeaway

When P6 said "this would boost my confidence walking outside," I understood that confidence, not detection accuracy, not inference speed, is the real deliverable. Phase 1 proves the interaction layer can deliver that confidence. Phase 2 must prove the perception model deserves it.

This project also deepened my understanding of trust in AI systems. For sighted users, AI assistants are evaluated on convenience. For blind users relying on NeuroNav at a crosswalk, the AI is evaluated on survival. That asymmetry demands a different design posture: extreme reliability, transparent uncertainty, graceful degradation, human override. Phase 1 validated that the alert grammar, voice design, and customization architecture can establish and maintain user trust. Phase 2 will validate that the perception model meets the reliability threshold this trust requires; CV accuracy and field performance have not yet been formally benchmarked in real-world deployments.

I carry these lessons forward into every project now. Design with, not for. Trust is binary. Safety over convenience. And always, always: the best technology is invisible, letting humans do what they were already trying to do, just a little more safely. NeuroNav Phase 1 is complete. The interaction layer is validated. The CV stack is ready for model benchmarking and field trials next.